AI Tools in Traditional Tabletop Wargaming


About the Project

I am tinkering with the AI APIs to find applications for this new tech in traditional Tabletop Wargaming.

Related Genre: General

This Project is Active

So how do we do this?

This will be a high-level description of how my QA Bot works.

Under the covers, ChatGPT and the APIs we are going to use for our QA bot convert the text we give them into tokens, which are the building blocks of our sentences and language. Think of it as translating our language into a format that the AI can understand. Tokens can be whole words, pieces of words, punctuation marks, or other meaningful units in the text. On average, a token is roughly equivalent to three-quarters of an English word, although the actual length varies. The various OpenAI APIs can only process a limited number of tokens in a single request. This limit applies to the sum of the input tokens (your prompt) and the output tokens (the AI-generated response).

The model I am using, gpt-3.5-turbo, has a limit of 4,096 tokens. That gives us 3,000-ish words, shared between the prompt and the response. That might be 10 pages of text. That’s great if you are working with OnePageRules but not so great if you are using a 100+ page rule book. We solve this with something called embeddings.
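To put rough numbers on the token budget, here is a minimal sketch based only on the three-quarters-of-a-word rule of thumb. The function names are my own for illustration, and the estimate is deliberately crude: a real tokenizer will give different counts.

```python
# Rough token arithmetic using the "one token is about 3/4 of a word" rule of thumb.
# This is an approximation only; a real tokenizer will disagree on exact counts.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(text: str) -> int:
    """Estimate how many tokens a piece of text uses, from its word count."""
    return round(len(text.split()) / WORDS_PER_TOKEN)


def estimate_words(token_count: int) -> int:
    """Estimate how many words fit into a given number of tokens."""
    return round(token_count * WORDS_PER_TOKEN)


def fits_in_limit(prompt: str, reserved_for_answer: int, token_limit: int = 4096) -> bool:
    """Check whether the prompt plus the space reserved for the answer fits the limit.

    The default of 4096 is the gpt-3.5-turbo limit; the limit covers
    input and output tokens together.
    """
    return estimate_tokens(prompt) + reserved_for_answer <= token_limit


print(estimate_words(4096))                      # 3072
print(fits_in_limit("word " * 3000, 500))        # False: about 4000 + 500 tokens
print(fits_in_limit("How far can I move?", 500)) # True
```

The point of reserving tokens for the answer is that a prompt which fills the whole limit leaves the model no room to reply.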

With embeddings, we convert the text in the PDF into numerical representations that capture the essence of the words and their relationships. To answer our questions, we first search for relevant parts of the text using embeddings, and then use the gpt-3.5-turbo API to generate a response based on the text we found. This is not like using the “Control+F” function to find a word in a PDF; we are not just matching characters. Instead, we are searching for content that is close in meaning to our question, so a passage can be found even when it uses different words than we did.
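As a toy illustration of searching by meaning, the sketch below ranks three rule snippets against a question using cosine similarity, a common way to compare embeddings. Everything here is invented for the example: the rule text is made up, and the three-number vectors are hand-written stand-ins for real embeddings, which would come from an embeddings API and have far more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical rule snippets with hand-made 3-dimensional "embeddings".
chunks = {
    "Units may move up to 6 inches per activation.": [0.9, 0.1, 0.0],
    "Roll one die per attack; hits on 4+.": [0.1, 0.9, 0.1],
    "Terrain provides cover against shooting.": [0.2, 0.3, 0.9],
}

# Pretend this is the embedding of the question "How far can my unit go?"
question_vector = [0.8, 0.2, 0.1]

# Pick the chunk whose vector points in the most similar direction.
best_match = max(chunks, key=lambda text: cosine_similarity(chunks[text], question_vector))
print(best_match)  # Units may move up to 6 inches per activation.
```

Note that the question says "go" while the matching rule says "move". A character search would miss that; a similarity search over embeddings is what lets the two meet.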

So here is the process. There are two phases: first we prepare the PDF for use by our QA Bot, and then we run the bot itself.

Preparing the PDF

- Divide our PDF into chunks small enough for the API to work with.

- Convert the chunks to embeddings.

- Store the chunks and their embeddings in a vector store.
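The first step in the list above, chunking, might look something like this. This is a sketch under assumptions: it presumes the PDF text has already been extracted into a plain string, it splits on words rather than tokens, and the chunk size and overlap values are arbitrary placeholders rather than the settings my bot uses.

```python
def chunk_text(text, chunk_size=200, overlap=20):
    """Split text into chunks of up to `chunk_size` words.

    Neighbouring chunks share `overlap` words so that a rule which
    straddles a boundary still appears whole in at least one chunk.
    """
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reached the end of the text
    return chunks


# Tiny demonstration with numbers standing in for words.
sample = " ".join(str(n) for n in range(10))
print(chunk_text(sample, chunk_size=4, overlap=1))
# ['0 1 2 3', '3 4 5 6', '6 7 8 9']
```

Each chunk would then be sent to an embeddings API, and the chunk text and its vector saved together.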

A vector store is a specialized type of database designed for storing and managing vectors. The numerical representations of our text, known as embeddings, are multi-dimensional vectors. You may be familiar with vectors in the form of X, Y, Z coordinates, which represent points in 3-dimensional space. In the case of text embeddings, the vectors have many more dimensions, allowing them to capture more complex relationships and patterns in the data. This makes vector stores particularly useful for efficiently handling and searching through large collections of text embeddings.
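To make the idea concrete, here is a toy in-memory vector store. It is not the store my apps use, just a sketch of the concept: the class name and methods are hypothetical, and the two-dimensional vectors are placeholders for real embeddings. Vectors are scaled to length 1 when added, so a plain dot product is enough to rank them at search time.

```python
import math


class TinyVectorStore:
    """A toy vector store: keeps (text, unit-length vector) pairs in a list."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _normalize(vector):
        # Scale the vector to length 1. Assumes the vector is not all zeros.
        length = math.sqrt(sum(x * x for x in vector))
        return [x / length for x in vector]

    def add(self, text, vector):
        """Store a chunk of text alongside its (normalized) embedding."""
        self.entries.append((text, self._normalize(vector)))

    def search(self, query_vector, k=2):
        """Return the k stored texts whose vectors are most similar to the query."""
        query = self._normalize(query_vector)
        scored = [
            (sum(q * v for q, v in zip(query, vector)), text)
            for text, vector in self.entries
        ]
        scored.sort(reverse=True)  # highest similarity first
        return [text for _, text in scored[:k]]


store = TinyVectorStore()
store.add("movement rules", [1.0, 0.0])
store.add("shooting rules", [0.0, 1.0])
store.add("charge rules", [0.8, 0.6])
print(store.search([1.0, 0.1], k=2))  # ['movement rules', 'charge rules']
```

A real vector store does the same job but uses indexing tricks to stay fast when there are many thousands of chunks, rather than comparing the query against every entry.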

Our QA app performs the following steps:

- Loads the text data from the vector store, creating a searchable database of embeddings.

- Converts our question into an embedding and searches the vector store for the chunks of text most relevant to it.

- Constructs a prompt that includes our question and the relevant text chunks, and sends it to the gpt-3.5-turbo API.

- Receives the AI-generated answer from the API and displays it to the user.
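The prompt-building step above can be sketched as follows. The wording of the instructions and the `build_messages` helper are illustrative assumptions, not the exact prompt my bot sends. The output is a list of role/content messages, which is the shape chat-style APIs such as gpt-3.5-turbo expect; actually sending it to the API is left out here.

```python
def build_messages(question, relevant_chunks):
    """Combine the user's question with the retrieved rule text into chat messages."""
    # Number the excerpts so the answer can point back at its source text.
    context = "\n\n".join(
        f"[Excerpt {number}]\n{chunk}"
        for number, chunk in enumerate(relevant_chunks, start=1)
    )
    system_text = (
        "You answer questions about tabletop wargame rules using only the "
        "excerpts provided. If the excerpts do not contain the answer, say so."
    )
    user_text = f"Rule excerpts:\n{context}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": user_text},
    ]


# Made-up rule text standing in for chunks returned by the vector store search.
messages = build_messages(
    "How far can my unit move?",
    ["Units may move up to 6 inches per activation."],
)
print(messages[1]["content"])
```

Telling the model to stick to the excerpts is a design choice: it nudges the bot to answer from the rule book rather than from whatever it happens to remember about other games.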

Clear as mud? It is surprisingly easy to implement if you have a little coding experience, and of course, ChatGPT could even help you write the code, but that is a tale for a different time.

The Story So Far...

As soon as I got over the idea of AGI eventually ending all life on planet Earth, I started thinking about how I could use the new Large Language Model AI stuff in Wargaming.

I am specifically talking about OpenAI’s APIs which are related to that ChatGPT thing everyone is talking about.

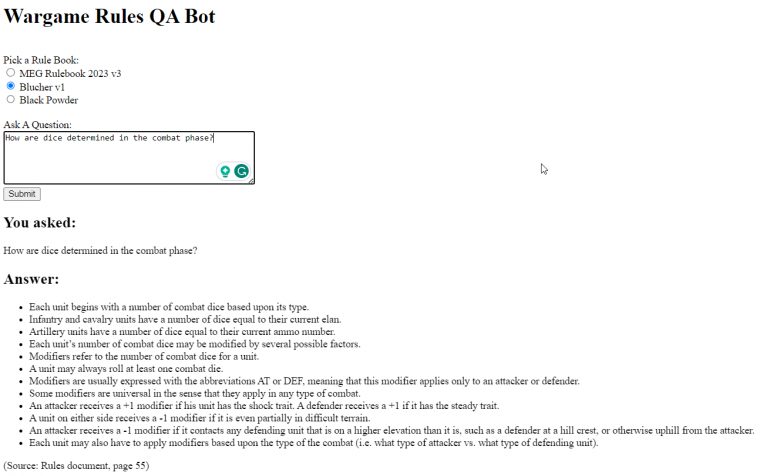

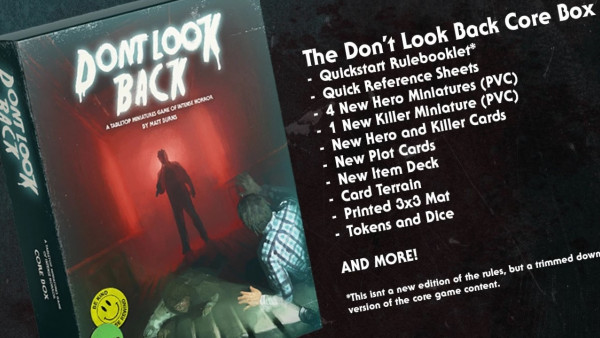

My first idea was using it for question answering (QA) on rules. Many rule books are available in PDF these days. I wanted a chat bot style interface that I could use to ask questions about a given set of rules and hopefully reduce the time spent looking up rules during gameplay. This is what it looks like as a Discord bot:

Discord is great as it gives you a chat sort of interface out of the box.

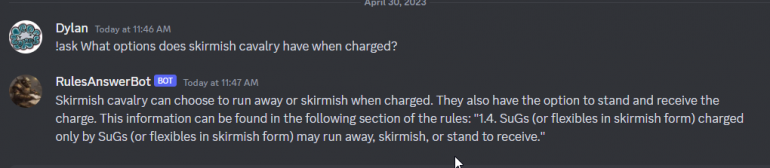

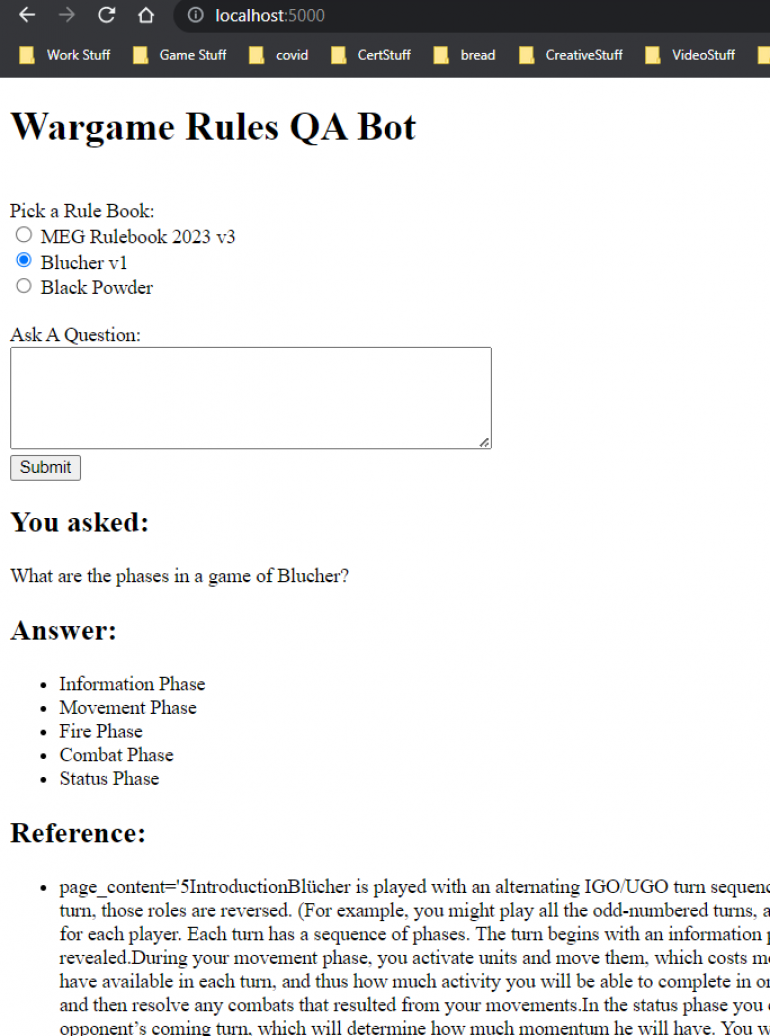

I also set something up as a Python Flask app (a website, for you non-coders). Keep in mind this is a proof of concept and not a commercial product, so go easy on my lack of web design skills. Here is what it looks like:

The web app version allows the user to select a set of rules and submit a question. It returns the answer and the reference text where the API was looking to get the answer.

This is all fairly primitive so far and not publicly available. Making something for use outside my friend group would require a lot more thought about security and cost. There are probably some legal questions too about building a public product on someone else’s intellectual property, namely that of the author or publisher of the rules.

The actual data behind these POC apps is stored locally in something called a vector store on my own PC. According to the privacy policy and terms of use published by OpenAI, data you submit through the APIs is not kept to train their AI. Note that this is not the case for the ChatGPT that most people are familiar with! Everything you put into the public version of ChatGPT can be used to train the model (meaning they may keep the data), unless you are using the new private mode. Never put your personal information into ChatGPT!

In my next entry, I will go into how this actually works, and maybe cover another use case I have: learning wargaming rules by answering AI-generated multiple-choice quizzes.

I am super curious as to other wargaming use cases for LLM APIs anyone else can think of.

If you are a coder playing with this stuff like me, maybe you’ve made your own AI wargame assistant, which I’d like to hear about as well.
