Interactive tabletop playing surface (Space Hulk Hobby Challenge)

About the Project

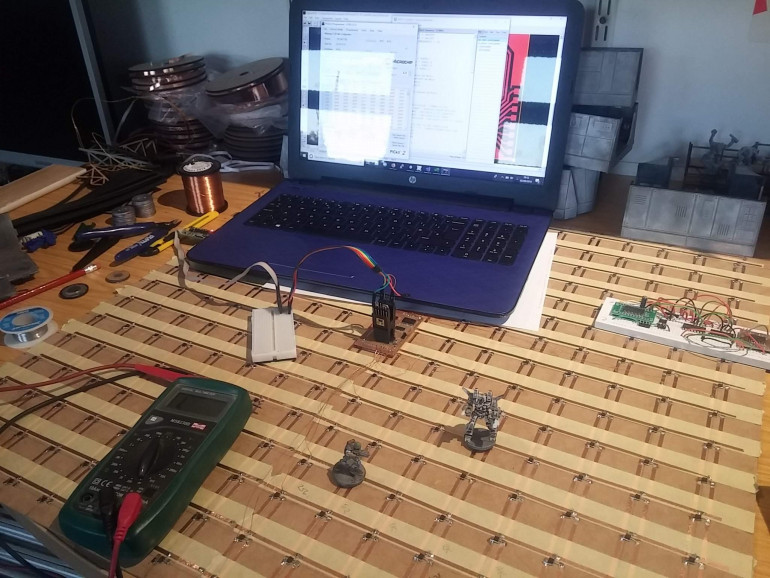

This started out as an idea for a two-player digital Blood Bowl game; it has since grown into a generic interactive playing surface that lets players hook up their tabletop miniature games to a tablet or smartphone. This would enable players to compete against a simple AI-based opponent. Excitingly - and in keeping with the new Space Hulk video game, which allows players to play as either Space Marines or Genestealers - it would also allow two players to compete against each other over the internet. Work on the underlying technology has been a hobby project for a while. The latest version allows anyone to use the system with their existing miniatures, using nothing more than a simple disc magnet. This competition encouraged me to get my finger out and actually complete the hardware and make a workable two-player game, to demonstrate the potential of such a playing surface.

Related Game: Space Hulk

Related Company: Games Workshop

Related Genre: Science Fiction

This Project is Completed

Online Space Hulk

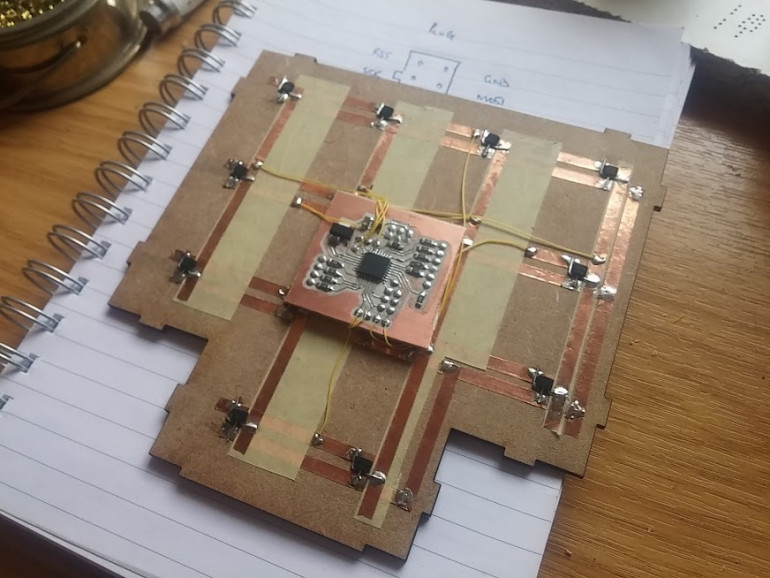

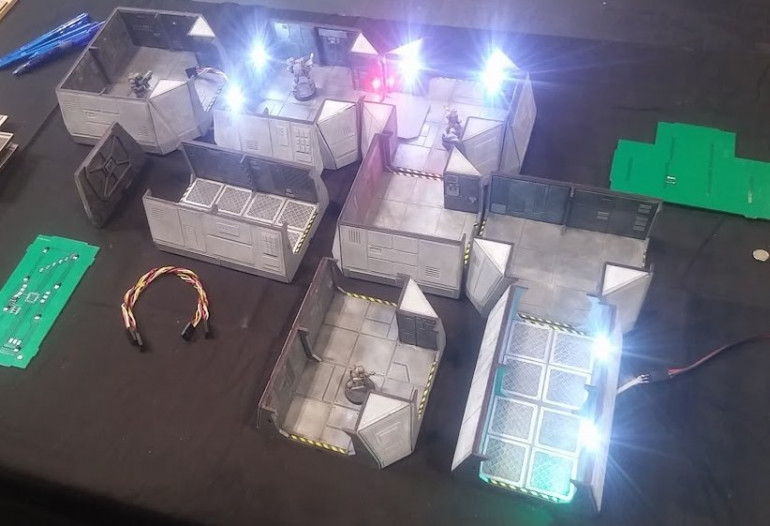

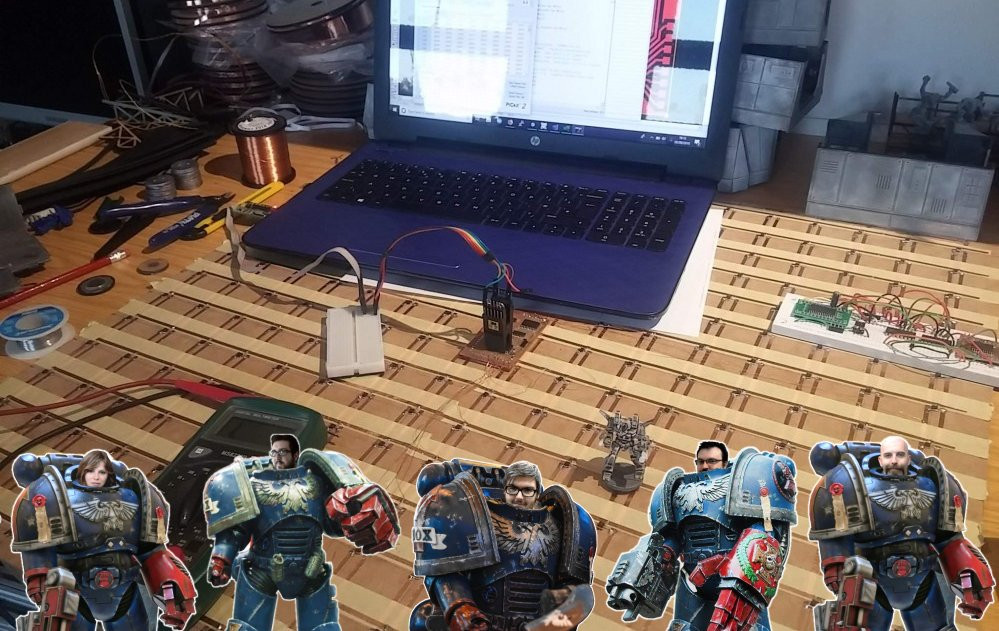

It started out as a series of interconnected terrain pieces, in a Space-Hulk style. Each piece had an embedded microcontroller and an array of hall sensors to detect the presence (or absence) of a miniature above it.

Any miniature could be used, from any range, at any scale. Simply place a neodymium disc magnet into the base, and the miniature can be detected when placed above one of the many sensors in the terrain. Because the sensors are entirely contactless, it even works through different (non-metallic) terrain types.

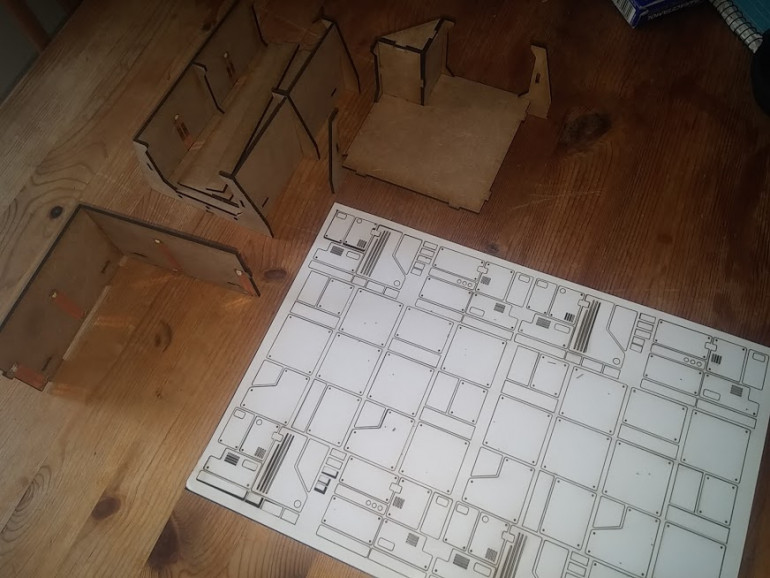

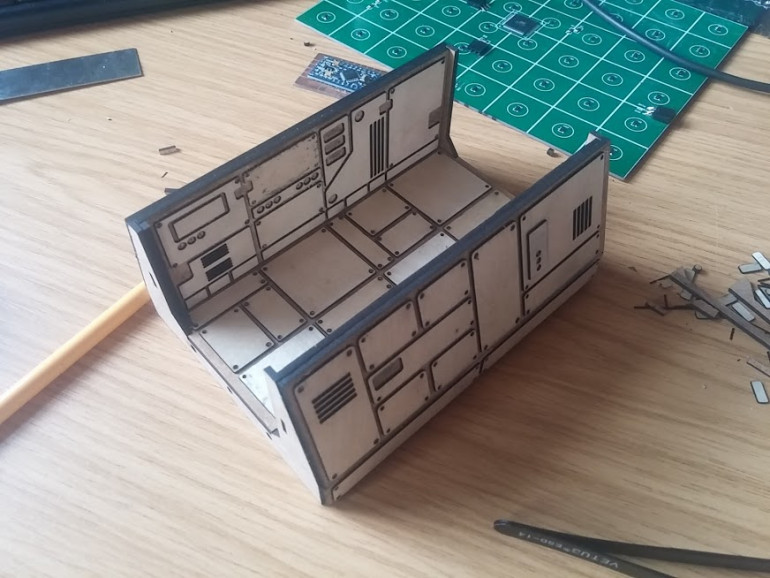

Laser cutting scenery

Laser cut walls and floors are relatively easy to create, but often look a bit “blocky” and boring once assembled. So I spent a lot of time cutting out loads of cardboard and thin veneer panels to decorate the terrain pieces.

Each “room” for the Space Hulk ship was based on a 4×4 grid. Corridor sections are 4×2 (a 4×4 square with the top and bottom rows removed). T-junctions are a 4×4 grid with the entire top row and the bottom left and bottom right squares removed.
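One way to picture these layouts is as a 4×4 mask per piece. The sketch below is purely illustrative: the names `ROOM`, `CORRIDOR`, `T_JUNCTION` and `playable_squares` are made up for this example and are not taken from the project's firmware or app.

```python
# Each terrain piece as a 4x4 mask: "#" = playable square, "." = removed square.
ROOM = ["####",
        "####",
        "####",
        "####"]

# A 4x4 square with the top and bottom rows removed (4x2).
CORRIDOR = ["....",
            "####",
            "####",
            "...."]

# A 4x4 square with the top row and the two bottom corners removed.
T_JUNCTION = ["....",
              "####",
              "####",
              ".##."]


def playable_squares(piece):
    """Return the (row, col) pairs that are still part of the piece."""
    return [(r, c)
            for r, row in enumerate(piece)
            for c, cell in enumerate(row)
            if cell == "#"]
```

Counting the squares gives 16 for a room, 8 for a corridor and 10 for a T-junction, which is also the number of sensor positions each piece would need.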

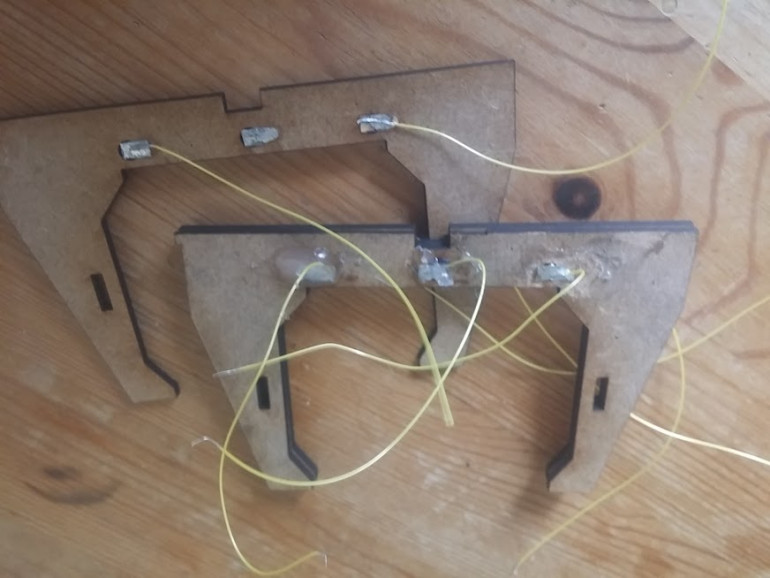

Piece connectors

At every edge of every terrain piece are doorframes, into which a three-way connector is added.

Having just three connectors is important, because we can run power/ground on the outside and keep the centre pin for sending/receiving data. Obviously, using four pins (power, ground, TX, RX) would have been much simpler, but then, at some point, we’d end up plugging a TX into an RX, if the pieces were rotated.

It was important to be able to keep to a single-wire system. I used tin-plated steel for the actual connectors, so that wires could be soldered to them, and they could still be joined using small magnets (soldering directly onto a magnet can sometimes cause it to lose its magnetism, as they are easily damaged through direct heat).

Scrap it and start again

I even added some LEDs to a few of the terrain pieces and could turn them on and off as pieces moved around the rooms. It all looked really impressive.

But it felt like a lot of work, with the door connectors (sometimes a little intermittent unless they were perfectly aligned) and the LEDs – although pretty cool-looking – were little more than a gimmick.

Given the amount of time and effort required to create each individual piece, it seemed a bit of a shame that the whole hardware couldn’t be re-used for other games – should I want to revisit my original idea of a digital Blood Bowl board, a lot of the technology I’d developed would be redundant.

There was only one thing for it….

Scrap the lot and start again!

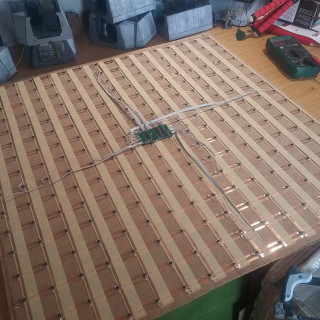

Large format multi-purpose skirmish panels

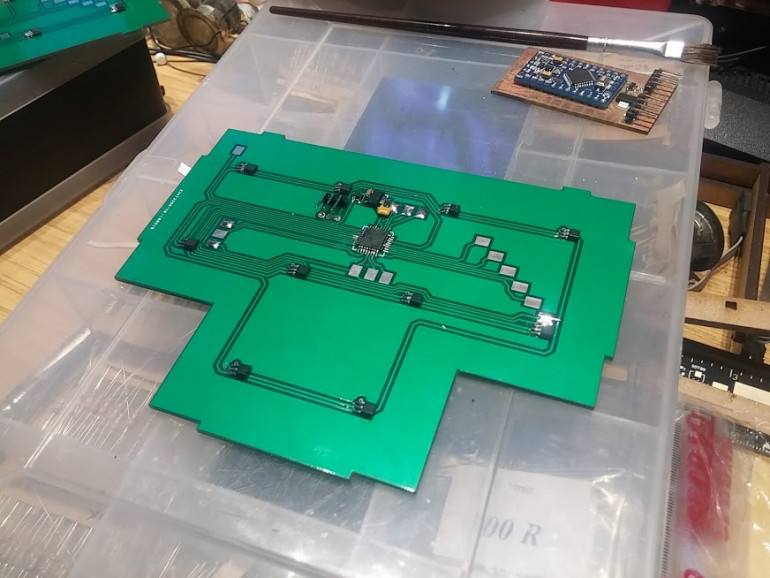

Originally I made my Space-Hulk-alike dungeon from multiple sections, each with a manufactured PCB in the base (PCBWay does relatively cheap circuit boards and even cuts them to shape at no extra cost).

Each piece was not more than 140mm x 140mm.

My new, multi-game panels are going to be 610mm x 610mm (simply because thin mdf sheets are readily available in 610x1220mm so are easier to cut to size). But the price per PCB for a massive 2ft square is about £45 per circuit board! That’s before shipping, before tax and import duty, and unpopulated (i.e. not including electronic components). That’s just too spendy!

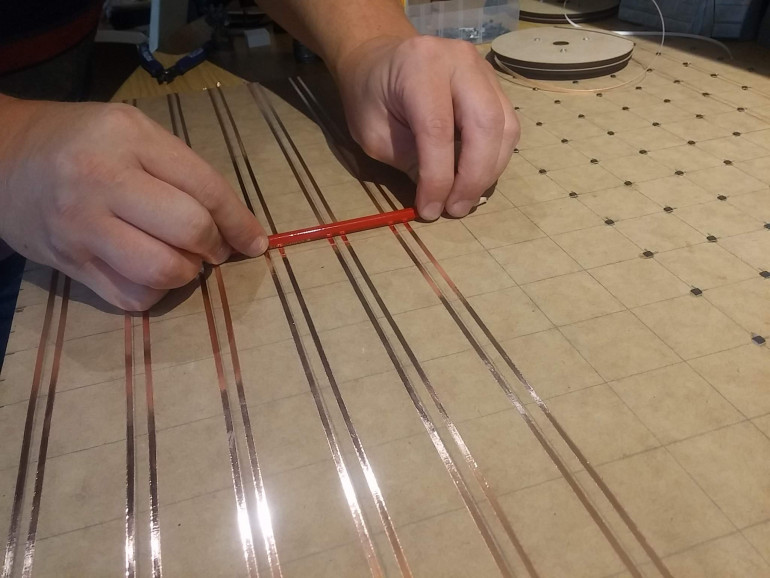

Using strips of copper tape (and masking tape to avoid unwanted shorts in the circuit) and some locally sourced mdf I managed to build a base ready for my hall sensors for about £3.00 all in. At least the project was starting to look feasible once more!

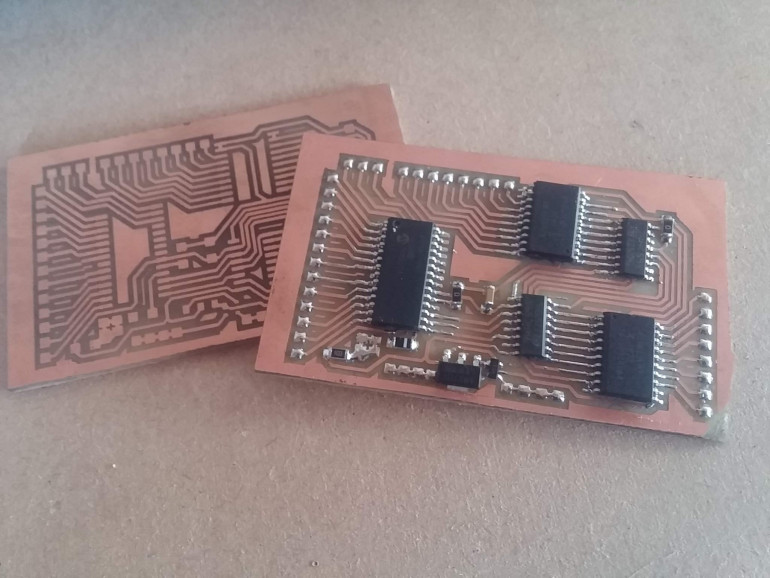

Etching your own PCBs at home isn’t as difficult as you’d think.

Simply print your (mirrored) design onto magazine paper (the cheaper the better) and iron it onto some copper clad board. Unless, of course, you have (or have access to) a laser cutter. In that case, you spray the board with matt acrylic paint and use the laser to burn away the paint in the gaps between the traces.

After throwing the whole lot into some warmed-up Ferric Chloride, it’s just a matter of waiting. I measured it as “the time it takes to make and drink a brew with two digestives”. About 15 minutes.

Once etched, some steady-hand soldering is no more difficult than getting the eyes right on one of your Cadian troopers – sure, it’s tricky, but not impossible.

My original multi-part dungeon used an Arduino in the centre of every single piece. Because the entire map was based on 4×4 sections, this meant that the microcontroller didn’t have to do too much work.

On these larger format panels, there’s a massive 16 x 16 grid. That’s 256 sensors! A simple 8-bit Arduino simply isn’t up to the job on its own. So I went back to my preferred platform and used one of Microchip’s PIC microcontrollers, a couple of shift registers, some current source drivers, and a lot of multi-plexing trickery in code.

To date I’ve got the panel reporting the square number whenever a miniature (with a magnet in the base) is placed on (or removed from) a square in the grid of sensors. Now to get the data into some kind of app…..

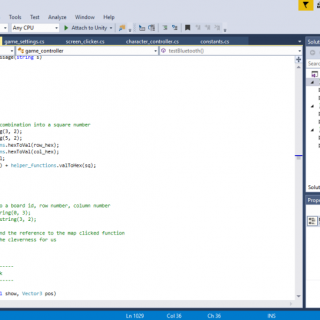

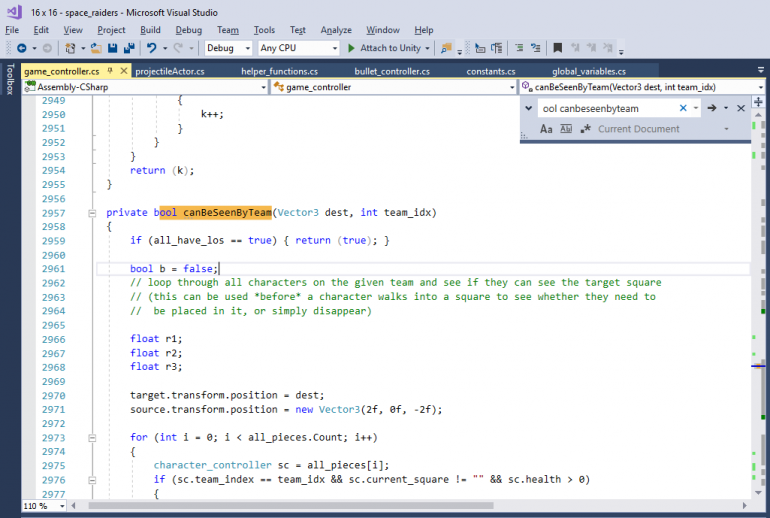

Coding is no spectator sport

With the hardware built and tested, the long, arduous task of building the accompanying app is ongoing. Coding doesn’t make for much of a spectator sport – it’s just screenfuls and screenfuls of gibberish (unless you, yourself, are a coder, then it’s full of dodgy functions and routines that “aren’t how I would have done it”).

But while the coding is ongoing, there’s still plenty to do on the tabletop.

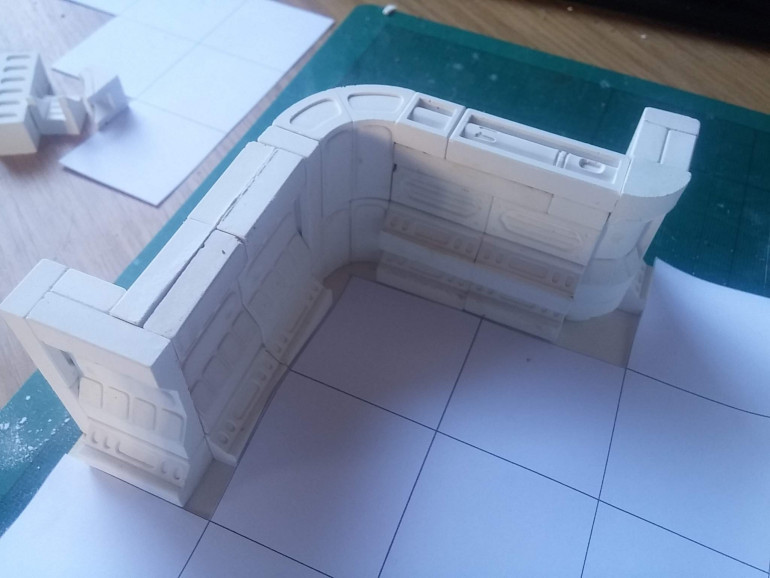

The hall sensor array can detect neodymium magnets from a distance of about 5mm – 8mm away. As the sensors are placed on the underside of some 1.5mm thick mdf, when the whole board is flipped the right way up, it means we can detect playing pieces when they are placed on the board, up to around 6mm away.

This means that we can place not just a printed sheet of paper or card, to represent our playing area, but actual terrain too!

I’ve had a selection of Hirst Arts moulds for a number of years and a few bags of dental plaster (Whitestone) left over from some product prototyping a while back. It didn’t take long to cast up and knock out a few different types of walls for some sci-fi terrain.

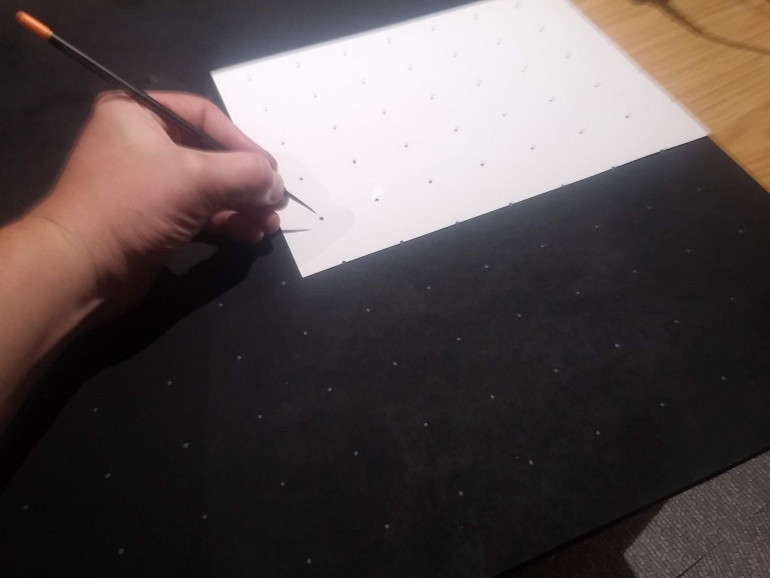

I decided that floor tiles might be a little thick, so mounted the wall pieces onto some 1mm thick “mountboard” (the kind of card used by picture framers to mount an image in a frame) and primed in black.
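As a rough check on how much material can sit between sensor and magnet, the arithmetic can be written out. This is only a sketch using the figures quoted in this post (5mm – 8mm sensing range, 1.5mm mdf, 1mm mountboard); the function name `clearance_above` is made up for the example.

```python
SENSOR_RANGE_MM = (5.0, 8.0)  # detection distance for the hall sensors
MDF_MM = 1.5                  # board the sensors are mounted underneath
MOUNTBOARD_MM = 1.0           # card the wall pieces are mounted on


def clearance_above(layers_mm, sensor_range_mm=SENSOR_RANGE_MM):
    """Return the (worst case, best case) gap left above the given layers."""
    used = sum(layers_mm)
    low, high = sensor_range_mm
    return (low - used, high - used)
```

With just the mdf this gives 3.5mm to 6.5mm of headroom; adding the mountboard leaves 2.5mm to 5.5mm, which is why thin card is a safer bet than thick cast floor tiles.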

The Hirst Arts pieces are built for a 1″ square grid, and my sensors are placed at 1.5″ centres – but many of the wall pieces are half-an-inch thick, meaning they can be placed at the end of a one-inch section to create 1.5″ pieces.

Alternatively, three Hirst Arts pieces placed next to each other create a 3″ section – the width of exactly two of my sensor squares.

Determined to get the pieces completed this side of Xmas, I favoured a quick drybrush and picked out just a few panels on the wall sections.

Floor panels are to be laser cut from some 300gsm card, drybrushed and glued to the floor of each terrain section.

It’ll be a couple of days before I can fire up the laser cutter, so until then, it’s back to the computer, to get coding…..

Here come the girls (girls, girls, girls)

Coding is taking up an extraordinary amount of time at the minute. To date I’ve got the hardware working (detecting miniatures as they are placed in each square on the sensor array) and data being sent back to a smartphone/tablet over bluetooth.

All very tech-y and pretty nerdy stuff.

But this isn’t a hobby-electronics project, it’s about making interactive Space Hulk. Which means we need some Genestealers.

So in between building and coding and painting, I hit eBay and bought some (poorly painted) second-hand tyranids. I figured that’s close enough to Genestealers, isn’t it?

This project is probably going to go right to the wire (when *is* the closing date, anyway?) so there’s not an awful lot of time to spend on painting miniatures. While in my mind’s eye, any new miniatures always look super-cool with multiple levels of blending, super-precise airbrushing and immaculate detail, the truth is these genestealers are going to get little more than a drybrush of blue over a black base, some flesh/purple on the hands and face and the simplest of basing.

Even just setting up the airbrush seemed like a lot of hassle.

So I grabbed a tin of Halfords Matt Black primer and in just a few minutes, had the whole horde base coated. I forgot to open the windows in my workshop, so think I need a lie down now – feeling a little light-headed!

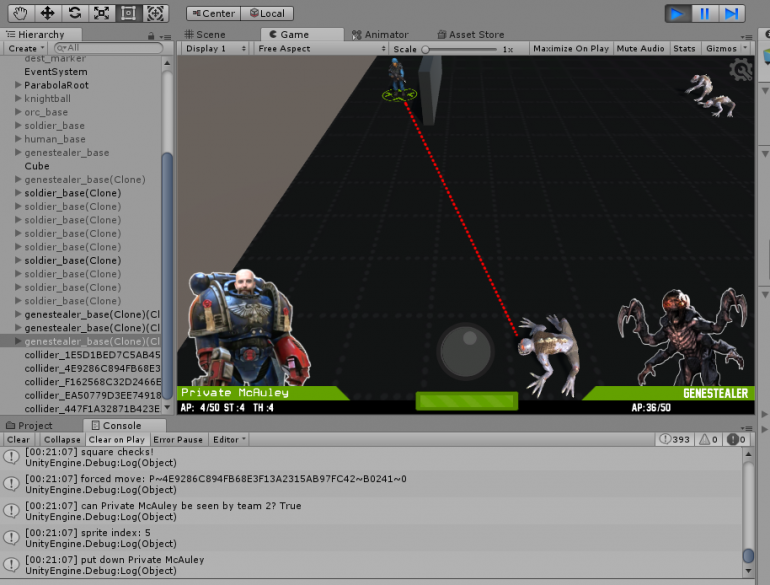

Unity Genestealers

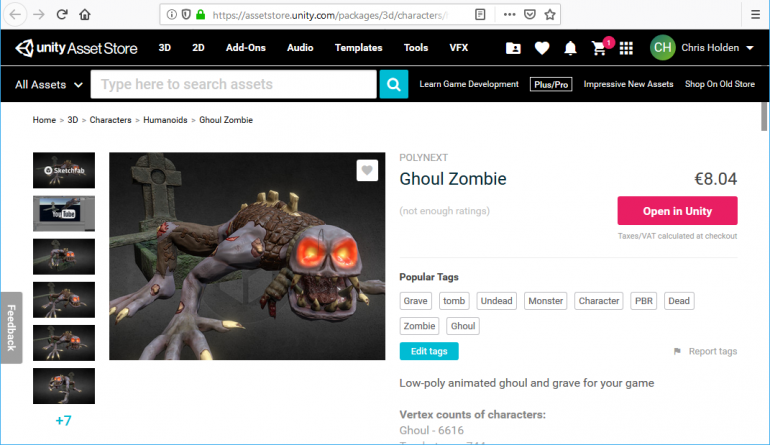

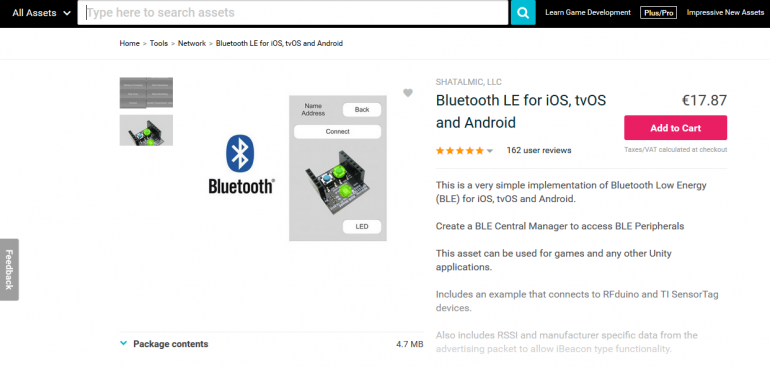

The Unity Asset Store is a great place to buy digital content – not just for Unity but for programming and games development in general. It just so happens that I decided to use Unity to do my games dev after all.

It has a fantastic cross-platform compiler – meaning one code base can be used to create apps for both iOS and Android (as well as Windows, Linux, Mac, even XBox and Playstation!) and it also gives you access to loads of ready-to-use content (such as 3d models, camera shaders, interface designs etc).

I’ve used Unity for a few non-game projects, almost always as a way of providing a smartphone interface to custom-made electronics hardware, via the excellent Bluetooth LE library (https://assetstore.unity.com/packages/tools/network/bluetooth-le-for-ios-tvos-and-android-26661)

Normally I use Unity only for “flat screen” interfaces, so it’s been quite a challenge learning how to use its 3D games engine (and then learn how to ignore 95% of it, and write my own code to control the on-screen characters in response to commands from the hardware).

The Asset Store is a great place to find rigged and even animated characters for your Unity games, but, unsurprisingly, there aren’t any GW Genestealers readily available. I guess this is mostly an IP/copyright issue, so I did think about having a go at creating a Genestealer model and rigging it.

After a couple of wasted evenings I decided that

- a) my 3D modelling sucks

- b) animating is really, really hard

so I decided to use a pre-existing character, even if it’s not a 100% match. The closest thing I could find was the “ghoul zombie” (https://assetstore.unity.com/packages/3d/characters/humanoids/ghoul-zombie-14477)

I particularly like the “climbing out of a tomb” animation- when set in a sci-fi/spaceship setting, this could look really cool, as the creature climbs onto the space deck from a hole in the floor.

But it’s not quite “Genestealer enough” for me.

The model and the animations, I’ll have to live with. Luckily, assets from the Unity Asset Store come with all the materials and textures that make up the creature, which are easily editable in GIMP or Paint Shop Pro/Photoshop. A few minutes with the clone brush and a bit of colour shifting and I think I’ve got my “genestealer-ish” character for the tablet/digital version of the game….

(footnote: the characters are deliberately “low-poly”, not just because of cost – everything in the asset store has a price tag! – but to ensure that the game will run across the widest range of devices. Once I’ve created one set of working hardware, I need to create another, to enable across-the-internet play – and I’ve no idea how old/modern my opponent’s device will be. Sticking to low-poly models that run on even old iOS/Android devices gives a greater chance of it “just working” without requiring a super-fancy graphics card or the latest CPU/GPU combination).

How the sensor array works

As development continues, there’s not really much to show on that front, other than a screenful of Visual Studio compiler errors, crash reports and a big pile of hair on my desk, where I’ve been pulling it out for four days.

But there’s more to this project than just coding (would that it were).

I’m hoping to get a video showing the hardware in action in the coming days, but in the meantime there’s been a few queries about what exactly “the hardware” does. It’s basically a grid of hall sensors and as each one is triggered (either activated or deactivated) it sends a message to the game/app via bluetooth.

Here’s a simple demonstration of how hall sensors work; different sensors work on different gauss strengths (i.e. some are more sensitive than others).

Each sensor is connected to a power source (in this case, a simple 3v battery) and ground. As the magnet comes close to the hall sensor, the third leg also gets pulled to ground; in the video I’m using this to make the LED light up. In practice, this leg is connected to an input pin on a microcontroller to create a digital signal (so we can tell when the magnet has been either put close to the sensor – LED would light up – or when it’s been moved away – the previously lit LED goes out).
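The "placed or removed" decision boils down to comparing the current reading of that third leg with the previous one. Here is a minimal sketch of the idea in Python rather than microcontroller code; it assumes the input idles high via a pull-up and reads low when a magnet is present, and the names `detect_change` and `events` are invented for the example.

```python
MAGNET_PRESENT = 0  # output leg pulled to ground by the sensor
NO_MAGNET = 1       # output leg held high by the pull-up (assumed wiring)


def detect_change(previous_level, current_level):
    """Compare two successive readings of one sensor's output leg."""
    if previous_level == NO_MAGNET and current_level == MAGNET_PRESENT:
        return "placed"
    if previous_level == MAGNET_PRESENT and current_level == NO_MAGNET:
        return "removed"
    return None  # no change, nothing to report


def events(levels):
    """Return (sample_index, event) for every change in a list of readings."""
    found = []
    for i in range(1, len(levels)):
        event = detect_change(levels[i - 1], levels[i])
        if event is not None:
            found.append((i, event))
    return found
```

Feeding it the readings `[1, 1, 0, 0, 1]` reports a piece placed at sample 2 and removed at sample 4; the steady readings in between produce nothing.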

Now that’s fine for one single sensor.

We’ve got a grid of 256 of them! (that’s a 16×16 arrangement). Very few microcontrollers have 256 inputs (and those that do don’t have the necessary pull-up input resistors built in). We need to use a different technique to read the array.

Multi-plexing is the process of reading a grid of sensors (or activating a grid of LEDs if you’re using the output of a microcontroller) in a line-by-line fashion.

The power for all of the sensors is connected in columns. All the inputs from a single row of sensors are connected together. When we get a signal from, for example, input row three, we then look at which column we’ve activated.

By comparing the currently active column and seeing which input row has created a signal, we can work out which individual sensor triggered the signal. By splitting the matrix down into 16 rows and 16 columns, we’ve reduced the number of required microcontroller pins down to just 32.

Luckily, microcontrollers work really, really, quickly.

So we can “scan” through all 16 rows tens – if not hundreds – of times a second; certainly fast enough to provide a timely response when the player places or removes a magnet immediately over a sensor.
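The scanning loop can be simulated in a few lines. This is a sketch of the general row/column technique, not the PIC firmware: `read_rows` stands in for the real hardware read, and the square numbering (`row * 16 + col`) is an assumption made for the example.

```python
SIZE = 16  # 16 columns powered in turn, 16 row inputs: 32 lines for 256 sensors


def read_rows(magnets, active_col):
    """Stand-in for the hardware: with only one column powered,
    report which of the 16 row inputs are signalling."""
    return [(row, active_col) in magnets for row in range(SIZE)]


def scan(magnets):
    """Power each column in turn and collect the occupied square numbers."""
    occupied = set()
    for col in range(SIZE):
        for row, triggered in enumerate(read_rows(magnets, col)):
            if triggered:
                occupied.add(row * SIZE + col)  # assumed numbering scheme
    return occupied


def changes(before, after):
    """Compare two scans: (squares newly occupied, squares newly vacated)."""
    return sorted(after - before), sorted(before - after)
```

Running `scan` repeatedly and passing successive results to `changes` gives exactly the two kinds of event the app cares about: a piece appearing on a square, or a piece leaving one.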

Now all that remains is to create a message to tell our app which particular sensor has detected the change in presence of a playing piece. The easiest way to do this is using a serial-to-bluetooth module. These are readily available, easy to work with and relatively cheap (maybe £3 from eBay).

Simply connect one to the RX/TX lines of the microcontroller and now, when we send data over serial/UART (a relatively simple task for anyone with basic electronics/Arduino/microcontroller experience) the message appears in the app, thanks to the Unity-bluetooth code library.
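For illustration, here is what a message could look like on the wire. The format is entirely hypothetical (a `P` or `R` for placed/removed, a three-digit square number, then a newline); the real project may well use something different, and `encode_event` and `decode_event` are names made up for this sketch.

```python
def encode_event(square, placed):
    """Build one line of ASCII to send over the serial/bluetooth link."""
    if not 0 <= square <= 255:
        raise ValueError("square must be between 0 and 255")
    prefix = "P" if placed else "R"
    return f"{prefix}{square:03d}\n".encode("ascii")


def decode_event(line):
    """Reverse of encode_event: returns (square, placed)."""
    text = line.decode("ascii").strip()
    if len(text) != 4 or text[0] not in "PR" or not text[1:].isdigit():
        raise ValueError(f"unrecognised message: {text!r}")
    return int(text[1:]), text[0] == "P"
```

A fixed-width, newline-terminated line like this is easy to split on the receiving side, even if several messages arrive in one chunk.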

A bit of hocus-pocus and some code bashing and we can control the characters in our app/game by moving playing pieces over the top of the sensor array. Just a little more debugging and a video will follow very soon……

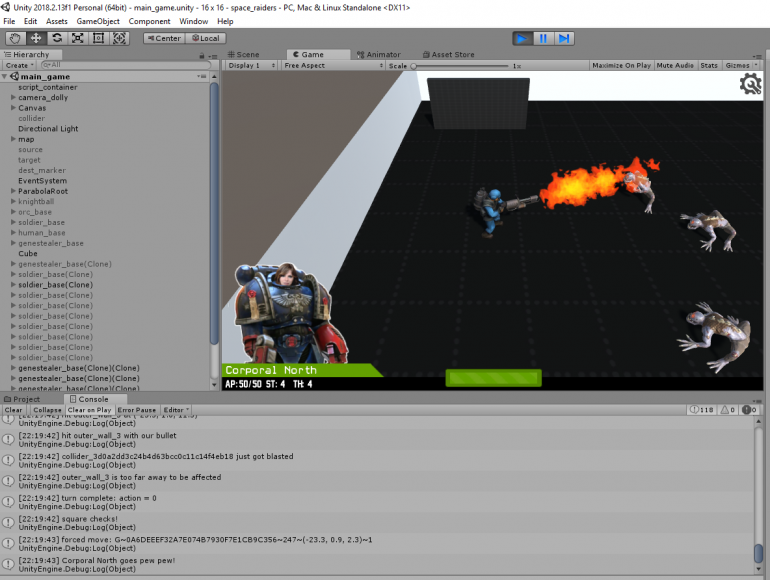

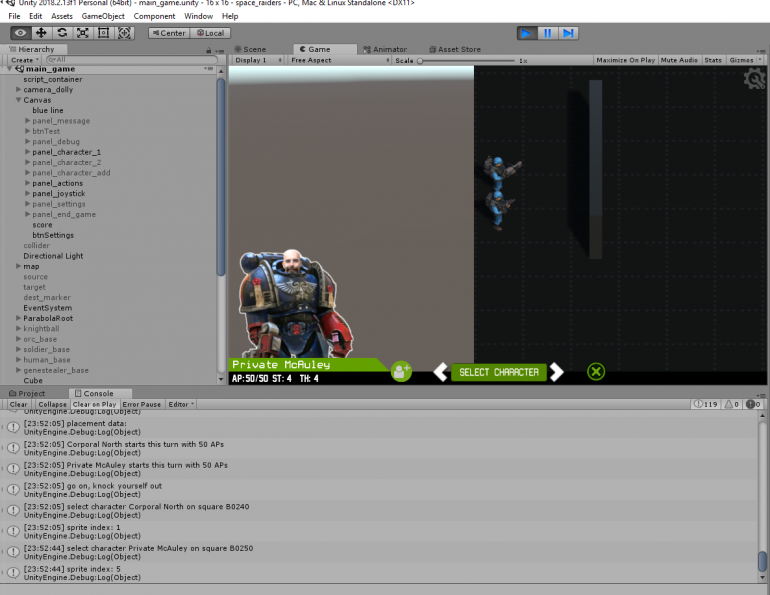

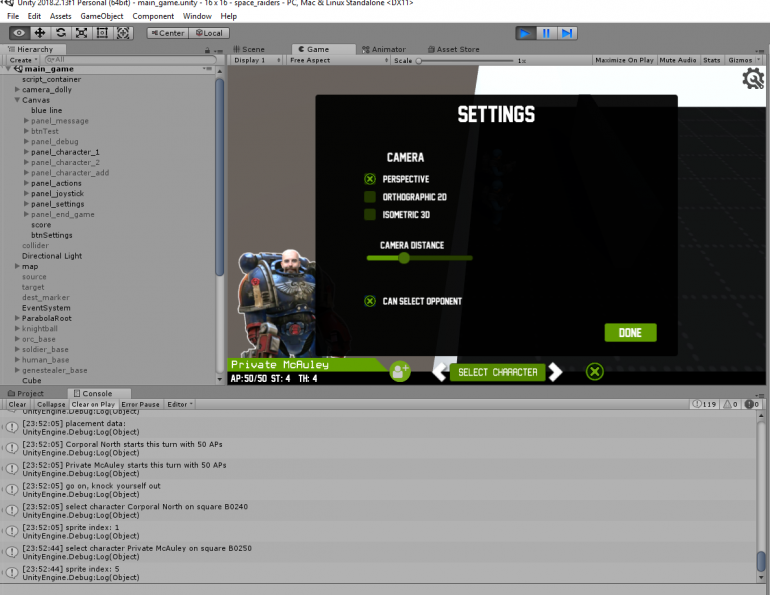

Creating games in Unity is just too much fun

Unity is both frustrating and wonderful to write games on.

For simple, pick-up-n-play mobile apps, it can be brilliant. But for a game like this, where the player selects an action (in our case it just so happens to be by picking up a playing piece and putting it down on some dedicated hardware) and then the responses are played back exactly, it can be pretty frustrating.

Of course, once one player has taken a turn, it’s important that we can record exactly what happened, and in what sequence, so that it can be played back on the other player’s device, when it’s their turn to play.
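The core of that requirement is an ordered log of actions that survives a round trip through a server. Below is a minimal sketch, assuming JSON as a stand-in transport format; the class `TurnRecorder`, the `playback` function and the action names are all invented for the example rather than taken from the game.

```python
import json


class TurnRecorder:
    """Collects one player's actions in the order they happened."""

    def __init__(self):
        self.actions = []

    def record(self, kind, piece, square):
        self.actions.append({
            "seq": len(self.actions),  # explicit sequence number
            "kind": kind,
            "piece": piece,
            "square": square,
        })

    def to_json(self):
        """Serialise the turn, e.g. for uploading to a web server."""
        return json.dumps(self.actions)


def playback(payload):
    """Rebuild the action list on the other device, in recorded order."""
    actions = sorted(json.loads(payload), key=lambda a: a["seq"])
    return [(a["kind"], a["piece"], a["square"]) for a in actions]
```

Storing an explicit sequence number means the playback order does not depend on how the data happens to be stored or returned in between.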

So far getting this exactly right has gone from a labour of love to a headache and a chore!

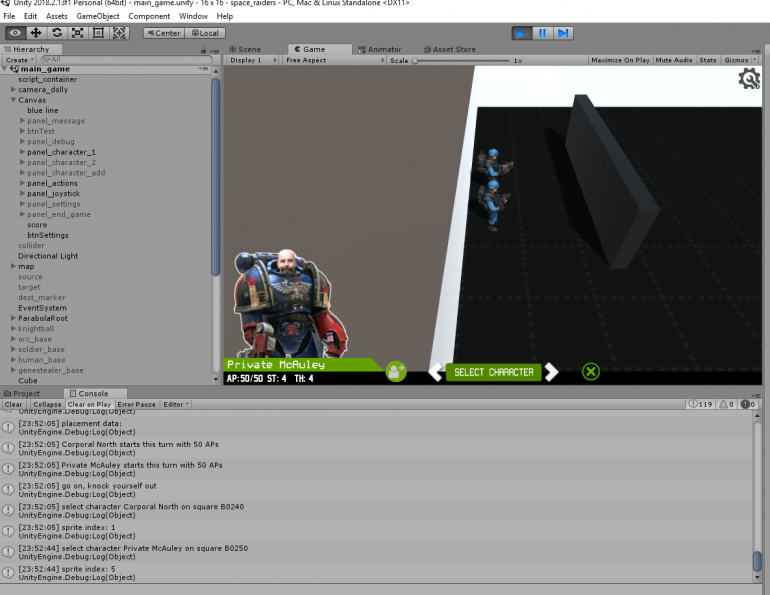

I’m using the Unity Toon Soldiers (https://assetstore.unity.com/packages/3d/characters/humanoids/toon-soldiers-52220) as proxies for my Space Marines (ssshhh, don’t tell GW, I’ve heard they can get a bit upset at players using proxy models).

These great little characters come with a selection of weapons – handguns, assault rifles and so on. With a little creativity, it’s easy enough to swap out bullets for lasers, to bring them up into the 41st millennium.

But one thing I’ve been having a lot of fun coding up are weapons that behave very differently to “regular bullet-based” ballistics. Like flamethrowers. With “real work” and other things, finding time to work on the project is hard enough as it is. But when I do finally get a few hours to write code, I find myself giggling at the Beasts of War crew dressed up as Space Marines, burning everything in their path on my sandbox test system!

Sure, like every software project, this thing is dangerously close to missing the deadline because of “mission creep”. But when you’re spending hours and hours trying to solve parabolic equations (those grenades don’t just appear on the map you know!) even the smallest of things can bring a little light relief.
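To give a flavour of the maths involved, here is one simple way to draw a thrown object along a parabola. It is a simplified sketch, not the game's actual ballistics: it assumes a fixed peak height and a progress value `t` running from 0 to 1, and `grenade_position` is a made-up name.

```python
def grenade_position(start, target, peak_height, t):
    """Position of the grenade at fraction t (0..1) of its flight.

    start and target are (x, y) board positions. The height follows
    4 * h * t * (1 - t): zero at both ends and exactly h at the midpoint.
    """
    x = start[0] + (target[0] - start[0]) * t
    y = start[1] + (target[1] - start[1]) * t
    height = 4.0 * peak_height * t * (1.0 - t)
    return (x, y, height)
```

Stepping `t` from 0 to 1 over the length of the animation moves the grenade in a straight line across the board while its height rises and falls along the arc.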

I really must knuckle down and get the actual gameplay sorted out.

So far things are looking pretty good on the computer screen – one last push and it should be on a smartphone/tablet in the near future….

Not just hardware and software, but web development too

The scale of this project has ballooned quite dramatically in recent weeks, but being featured in the weekend roundup was quite inspiring, so I got a bit of my mojo back and pushed on.

I might even have to take a few days off work as the deadline looms — soooo much still to do (and still I can waste hours just burning toon versions of space aliens with a flame thrower, all for absolutely no purpose whatsoever!)

Of course there’s a lot of work building the electronics but, as an electronics engineer, that’s the least part of the build for me! The game/app development is proving to be a big drain on time, but there’s also a lot of time being spent building tools that nobody will ever see…

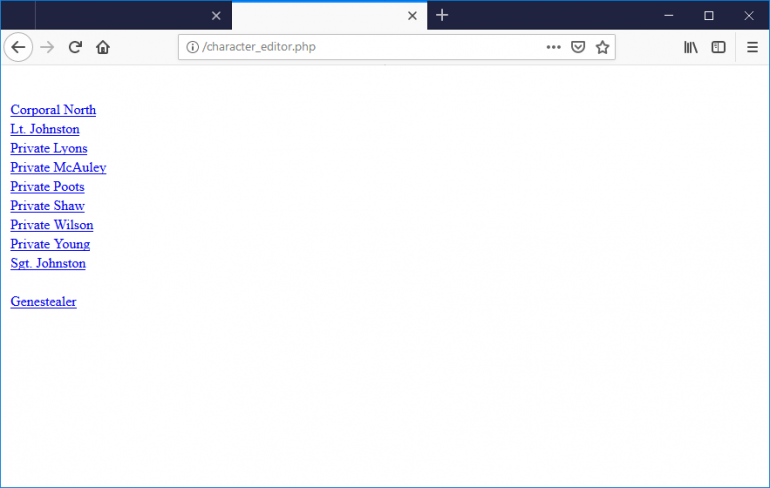

An online character editor, for example.

Because if you’re going to play this game over the internet, then both players need to be able to access a common set of data; it can’t be stored locally (where nefarious types might hack into the relatively simple data structures, to give their own team an unfair advantage!)

Yep, it’s fugly, but it works. A simple online editor lets me set parameters about each of the teams playing – from weapon types to headgear to what type of armour they’re wearing. In time, it’d be nice to get a proper UI built around this, and a log-in system so different players could manage their own teams online.

Unfortunately, it’s unlikely that this will all be live by the end of the challenge date. So for now players just have to play with teams that I build. I just hope all that power doesn’t go to my head…..

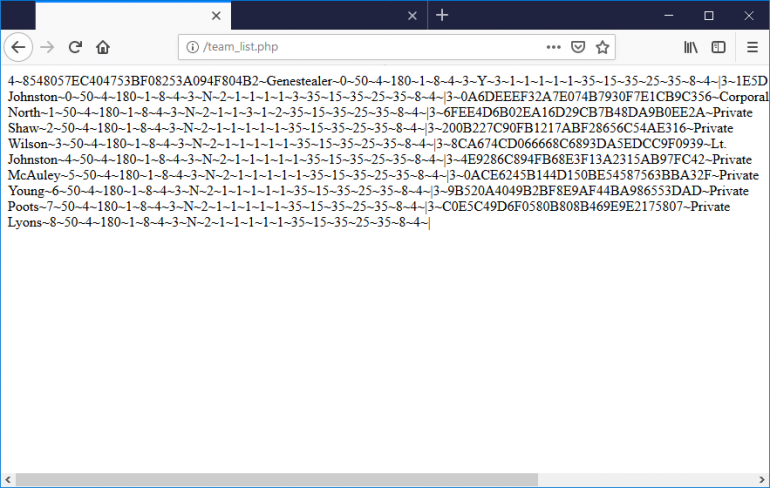

The reason for the extra effort to build an online editor? Well, basically, because my game app uses a really crude data structure – and it mostly looks like gibberish. Anyone who’s ever worked with computers and data transfer will probably recognise it as something from the late 80s – hastily delimited strings and nasty pipe characters everywhere.

Hey, don’t judge my code. It works, ok? 😉
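For anyone who hasn't met this style of data, here is a small illustration of pipe-delimited records. The field order (name, weapon, headgear, armour) and the `;` separator between characters are guesses for the sake of the example, not the app's real format; `parse_team` and `format_team` are likewise invented names.

```python
FIELDS = ("name", "weapon", "headgear", "armour")  # hypothetical field order


def parse_team(data):
    """Turn 'a|b|c|d;e|f|g|h' into a list of dictionaries."""
    team = []
    for record in data.split(";"):
        if not record:
            continue  # tolerate a trailing separator
        values = record.split("|")
        if len(values) != len(FIELDS):
            raise ValueError(f"expected {len(FIELDS)} fields, got {record!r}")
        team.append(dict(zip(FIELDS, values)))
    return team


def format_team(team):
    """Reverse of parse_team."""
    return ";".join("|".join(member[f] for f in FIELDS) for member in team)
```

The weakness of the format shows up straight away: nothing in the string says which field is which, so a friendly editor that hides it from humans is well worth having.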

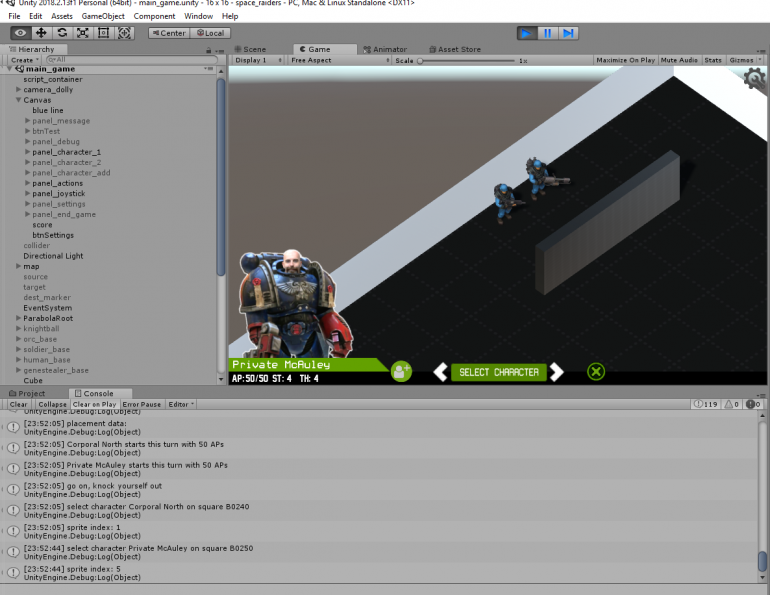

Messing about with cameras

Yes, I know. The deadline is looming and there are plenty of other things that need to be getting done.

But hour after hour of debugging code can get pretty disheartening – especially when stuff you thought was working and put to bed suddenly breaks because you’ve tried to add in a couple more variables to make the code more “modular” and re-usable (object-oriented fans know what I’m talking about, right? Sometimes I think I should have written this in QBasic…)

Anyway, sometimes it’s nice to spend an hour and just make something that works. And can’t be broken, no matter how much extra code you throw at it.

Thanks to the way Unity uses a “main camera” it’s really easy to offer the player multiple viewpoints, without screwing up all your trigonometry (that actually makes the game work).

Better still, it doesn’t require any tricky matrix multiplication or translations (as would be necessary if you wanted to implement different points of view to your own sprite-positioning code). Just set a couple of values and let Unity take care of things for you.

It's only a simple settings screen. But, thanks to feature creep, expect this to balloon in the coming days....

A simple settings screen lets us choose between three different types of camera. We’ve already seen the default “perspective” view. But there’s something quite comforting about going “old school” and drawing the sprites “flat” onto the screen. Like an old Nintendo or C64…

And for those of us who used to love games like Head over Heels and Gunfright on the old ZX Spectrum, there’s even a “fake” 3D isometric view too!
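For comparison, this is roughly the arithmetic a hand-rolled sprite engine would need for that isometric look, and which the Unity camera makes unnecessary. It is a sketch of the classic 2:1 tile projection with assumed tile dimensions; `to_isometric` is a made-up name and has nothing to do with Unity's API.

```python
def to_isometric(col, row, tile_width=64, tile_height=32):
    """Map a board square to the screen position of its tile.

    Moving one column goes right and down; moving one row goes left
    and down, which produces the familiar diamond-shaped grid.
    """
    screen_x = (col - row) * tile_width // 2
    screen_y = (col + row) * tile_height // 2
    return screen_x, screen_y
```

Squares further "into" the board end up lower on the screen, so drawing them in order of increasing `screen_y` keeps nearer sprites on top.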

Hey, I know none of this actually improves the gameplay. But just right now, I need to claim a few simple victories, just to stay inspired 😉

That stupid looking block, right in front of the two main characters? Yeah, I spotted that too. It’s there for a reason. You see, unlike most tabletop games, because players can play this against each other remotely, there’s no need for them to see their opponent’s pieces, is there? So the big ugly brick wall is just an early test piece for line-of-sight and hidden movement….

Editable custom art

“Isn’t Sam a little… short to be a stormtrooper?” asked some wag on a recent blog post. I’m not sure. I’ve never met him. Maybe that’s why he always sits at the far side of the table during videos? Like the famous Father Ted caravan scene, he can simply claim “I’m not small, I’m just further away”….

Anyway, I think I fixed it.

And then I thought “I don’t want to fix problems with imagery every time someone pipes up it should be different”. So I extracted the artwork from the app and made it an external resource. To those who don’t bother much about such things, the end experience is no different.

But to those who want to, they can now find the external .png (on their phone/smart device) and edit it, to include their own favourite characters.

Suddenly it made sense!

I’ve painted my Space Marines as Ultramarines. No, not because I haven’t the imagination not to. And no, not because I had loads of blue left over from buying three copies of Warhammer:Conquest magazine a few weeks back for the freebie miniatures (actually, probably a bit of that). I just liked the blue marines.

But Space Hulk is usually played with Terminators (it’s a good few years since I actually played Space Hulk and I’m not sure where in the loft my old miniatures are any more). If I’m playing a two-player game over the intertubes, it’s quite possible that my opponent is using Blood Angel Terminators for their Space Marines and 2nd edition genestealers, while on my board, hundreds of miles away, I’ve got regular Smurfs and Tyranid proxies for my playing pieces.

By allowing both players to edit their own artwork, each can have the graphics in their app match the miniatures on their own tabletop!

Maybe in future there could even be some kind of online image editor for each character too. But that’ll have to wait. I’ve got (yet more) coding to finish off.

As my wife said, “If you think I’m going to sit here drybrushing your terrain because you’ve run out of time, messing about adding stuff that isn’t really important to your bloody game instead of just getting your head down and getting it finished, you’ve another think coming”.

She’s quite supportive like that.

First app testing

Constantly compiling code, transferring to a tablet, testing, debugging, correcting code, re-compiling, transferring back to the tablet and so on is a really tiresome development loop.

So I added a few UI elements to the app, so as a game it can be tested entirely from my laptop (without having to connect the custom hardware as a controller). I had to really consider how the game would play with the actual game board and miniature playing pieces, and tried to keep the app UI as close to this as possible.

For example, littering the screen with buttons would have been easy (and relatively quick to implement). But the app shouldn’t be the focus of the game – the miniatures, tabletop and terrain should. So where possible, having to touch the screen to perform tasks should be kept to a minimum.

That said, creating a system whereby picking up and putting down pieces on the game board can be simulated by clicking on the screen has accidentally made the whole game more playable. Because while one player diligently uses their miniatures to interact with the app, their opponent could play entirely onscreen, using the virtual controls.

No more waiting for them to get back to the hobby table to take their turn – they could continue playing, even on the bus ride home!

So what are we looking at in this video?

Well, it’s rough and it’s crude, but it’s functional. It shows two players being simulated – firstly selecting and placing their characters on the board, then moving them and showing how the app responds to things like line of sight and hidden movement.

At first, as the onscreen visual joystick flies around the board, we can see it’s completely empty (bar the big block representing a wall). On the screen, we click a button to change the character we want to introduce to the game. On the tabletop we simply place the miniature we’re going to use onto a dedicated square and each time we put them down, the character the miniature represents changes; when you’re happy with your selection, simply place them onto the board.

After team one has placed their miniatures, their turn is ended. The actions they have taken (characters selected, deployment locations placed etc) are uploaded to the web server.

When it’s team two’s turn, a quick fly around the board reveals nothing. That’s not to say that team one hasn’t deployed their pieces – but because they’re hidden behind a wall, team two can’t see them yet!

Team two uses the same technique to select their characters and places their miniatures on the board. The subtle difference here is that team two is allowed to select multiple instances of the characters on their team (it wouldn’t be much of a game if we could only have one genestealer on the board at a time!)

One of the key features of Space Hulk was “field of view” which I’ve tried to recreate here. Every character has a field of view of 180 degrees (so can only see things in front of them). In fact, each character could have a different field of view if required (so characters with big, bulky armour could have their FOV reduced to 140 degrees, keen-eyed, fast-moving characters might have a full 360 degree field of view etc).
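A field-of-view test of this kind comes down to comparing two angles. The following is a minimal sketch rather than the game's own code: it assumes facings are stored in degrees measured anticlockwise from the positive x axis, and `bearing_to` and `in_field_of_view` are names invented for the example.

```python
import math


def bearing_to(viewer, target):
    """Angle in degrees from viewer to target, anticlockwise from +x."""
    return math.degrees(math.atan2(target[1] - viewer[1],
                                   target[0] - viewer[0]))


def in_field_of_view(viewer, facing_deg, target, fov_deg=180.0):
    """True if target lies within fov_deg, centred on the viewer's facing."""
    # Wrap the difference into the range [-180, 180) before comparing.
    offset = (bearing_to(viewer, target) - facing_deg + 180.0) % 360.0 - 180.0
    return abs(offset) <= fov_deg / 2.0
```

A character facing along +x with the default 180 degrees sees a target at (1, 1) but not one at (-1, 1); narrow the value to 140 and a target at (1, 3), about 72 degrees off-centre, drops out of view as well.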

This means that the facing of a character is important. To activate the “action menu” for any character, simply pick them up and put them back down in the same square – doing this cycles through the action options (and is simulated in the game by selecting a character, then clicking on the square they are standing in).

With the active character selected, and the action “face target” selected, selecting any other square on the board will cause the character to rotate and face the chosen target.
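The pick-up-and-put-down gesture can be modelled as a tiny state machine. This is an illustrative sketch only: the class `ActionMenu` is invented, and apart from "face target" the action names are placeholders.

```python
class ActionMenu:
    """Cycles through actions each time a piece is lifted and replaced
    on the same square."""

    def __init__(self, actions):
        self.actions = list(actions)
        self.index = -1          # nothing selected until the first "tap"
        self.lifted_from = None

    def pick_up(self, square):
        self.lifted_from = square

    def put_down(self, square):
        """Return the newly selected action, or None if the piece was
        put down somewhere else (i.e. it was not a menu gesture)."""
        same_square = square == self.lifted_from
        self.lifted_from = None
        if not same_square:
            return None
        self.index = (self.index + 1) % len(self.actions)
        return self.actions[self.index]
```

Because the menu only needs "lifted from here, replaced here", the same two hardware messages that report movement are enough to drive it, with no extra buttons on the board.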

After team two has placed their characters, we upload their turn, flip back to team one and run the app again. The eagle-eyed among you might notice a slight delay between the app running and the turn starting; this is because before you get to play your turn, your opponent’s previous turn is played out by the computer.

At the start of team one’s second turn, a quick fly around the board shows us that there are no enemies visible. Once again, it’s not that they’re not there – just that we can’t see them (since some nitwit thought it would be a good idea to deploy our characters behind a big wall).

It doesn’t take long, though, for the baddies to reveal themselves… (genestealers are always the baddies, right?). As soon as our intrepid Space Marine rounds the corner of the wall, the first genestealer comes into view and the app waits for us to place the appropriate miniature on the board.

Another step forward and the other enemies become visible, prompting the player to halt the game and place the other miniatures on the board.

This method of interrupting a player’s turn allows us to implement line-of-sight and true hidden movement. No more chits or blips, or planning your strategy based on knowing where your opponent is (or is likely to appear). If you can’t see them, they ain’t there!
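On a square grid, a common way to test line of sight is to walk the squares between two pieces and check for walls. The sketch below uses Bresenham's line algorithm for the walk; it is one possible approach, not necessarily the one used in the app, and `line_squares` and `has_line_of_sight` are names made up for the example.

```python
def line_squares(start, end):
    """Squares on a Bresenham line from start to end, both included."""
    (x0, y0), (x1, y1) = start, end
    dx, dy = abs(x1 - x0), abs(y1 - y0)
    step_x = 1 if x1 >= x0 else -1
    step_y = 1 if y1 >= y0 else -1
    error = dx - dy
    x, y = x0, y0
    squares = []
    while True:
        squares.append((x, y))
        if (x, y) == (x1, y1):
            return squares
        doubled = 2 * error
        if doubled > -dy:
            error -= dy
            x += step_x
        if doubled < dx:
            error += dx
            y += step_y


def has_line_of_sight(viewer, target, walls):
    """True if no wall square lies strictly between viewer and target."""
    between = line_squares(viewer, target)[1:-1]
    return not any(square in walls for square in between)
```

With a wall at (2, 0), a viewer at (0, 0) cannot see a target at (4, 0), but step the viewer round to (0, 2) and the line passes over the wall square's neighbours instead, so the target pops into view.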

App testing turn two

Now we’re getting into the meat-and-bones (as my old man used to say) of the project. And something that has given me sleepless nights for days (actually, more like had me gazing into the middle distance during Corrie, trying to work out how it should all work, leading the wife to ask “are you sure you’re alright, love?”)

It’s all well and good creating a two-player game from the perspective of a traditional “video game” developer – but in this instance, things are not necessarily happening in real-time.

While I’m taking my turn, you might be having your pie and chips in front of the telly. Only once my turn is complete will you get to know about it. And when it comes to your turn, before you can move a piece, you need to update your board – remember we’re playing remotely, on two separate boards, possibly hundreds of miles apart – so everything ends up as it was at the end of my turn (not how you left your board, at the end of your last turn).

So it’s quite possible that two players “go out of sync” with each other, and we need a system of getting everything up-to-date before each turn is played out.

So here I am, playing turn two of the genestealers.

But before I can move my pieces, I need to get your guys into position (after all, you could have played out your turn while I wasn’t actually watching). Space Hulk is a turn-based strategy game after all….

Also, if your guys do anything important, it’d be nice for me to see it. So if you were to open fire with a flamer and wipe out three of my genestealers, I’d quite like to know why I’m being asked to take them off the board before my turn commences.

Which is why, before either side takes their turn, there’s a delay, as the computer brings everything up-to-date – important actions can be seen on the screen, everything is explained (without a big, long-winded explanation) and the game continues from the point it left off at the end of each turn.

You can see the turn being played out from the previous video (when that video ended recording, I also moved my second Space Marine – any movement performed out of sight of the genestealer player happens automatically; as soon as the character comes into view, however, I’m prompted to pick up and put down the miniatures in the appropriate squares on the board).

In the video, a “pick up” request is indicated by the red arrows (as they point outwards/upwards from the piece on the board) and a “put down” request is indicated by the green arrows. I’m simulating these messages from the hardware by clicking on the screen, but when connected to the interactive game board, these messages will be sent automatically as you pick up/put down the miniatures on the board.

There are still a few little wrinkles to iron out. But so far, the game playback system (you can replay a game right from the very start, or simply from the last place you left off) is working really well.
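For anyone wondering how “replay from the start, or from where you left off” might hang together, here’s a minimal sketch in Python (purely for illustration; the app itself is built in Unity, and this is not its actual code). The `TurnLog` class, its method names and the tuple format for events are all made up for the example. The idea is simply that every event goes into one ordered list, and each player remembers how far through that list they’ve already watched.

```python
from dataclasses import dataclass, field


@dataclass
class TurnLog:
    """Ordered record of everything that has happened in a game.

    Hypothetical structure: events are plain tuples, and each player
    has a bookmark saying how many events they have already replayed.
    """
    events: list = field(default_factory=list)
    seen_up_to: dict = field(default_factory=dict)

    def record(self, event):
        """Append one event (a move, a shot, a door opening...)."""
        self.events.append(event)

    def pending_for(self, player):
        """Events this player must replay before taking their own turn."""
        return self.events[self.seen_up_to.get(player, 0):]

    def mark_caught_up(self, player):
        """Call once the player's board matches the end of the log."""
        self.seen_up_to[player] = len(self.events)

    def replay_from_start(self):
        """Everything, in order, for a full replay of the game."""
        return list(self.events)


log = TurnLog()
log.record(("marine_1", "move", (2, 3)))
log.record(("marine_1", "fire", (5, 3)))
log.mark_caught_up("marines")       # the marine player made these moves
catch_up = log.pending_for("aliens")  # the alien player has seen none of them
```

Because the bookmark is per player, it doesn’t matter whether the other side watches live or turns up two days later; they just get whatever is past their bookmark.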

It also means that as well as a real-time, online option (which is still possible, even with this system in place) there’s also the possibility of a true turn-based, almost play-by-email option.

And who doesn’t love playing games via email, eh?

What keeps *YOU* awake at night?

For some it’s things like this. Horrible, scary, angry aliens, with big claws and acid for drool. For some of us, it’s more mundane things. Like – what the hell is going on here….

There’s a weird bug and it’s stuff like this that takes aaaaggges to track down and fix. On the face of it, everything is working well. Although I’m playing as the alien team, I’ve loaded up the last turn played and asked the computer to play it through.

As expected, I’m prompted to pick up and then put down each of the first two characters in turn – exactly replicating the movements I made when the turn was recorded.

Then something funny goes on with the last “genestealer” character. He’s decided he’s not going to hang around and wait for me to pick up and put down the miniatures, he’s off on his own.

Until the very last move. Then the app wants me to put the miniature down in its final resting place. But not on any of the squares in between. Yet the first two characters worked just fine.

THIS is the kind of nonsense that keeps me awake at night!

I built a sort-of scripting language for replaying turns and whenever one move (into a new square) or one action is complete, I call a function to say “are there any other moves left to do?”

This obviously works, because the third character happily carries them out without prompting. As characters are moved across the board, they constantly check “can anyone see me?”. If a piece is to be moved (or an action, such as firing, is to be carried out) by a character that cannot be seen, the computer makes the on-screen avatar invisible and carries out the instruction with no intervention (it doesn’t hang around waiting for you to pick up or put down miniatures on the board, it’s basically “hidden movement”).
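The playback loop described above could be sketched roughly like this. It’s Python for illustration only (the real thing lives in Unity), and `play_back`, `can_be_seen` and `prompt_player` are names invented for the example: the first is the loop, the other two are callbacks the caller would supply.

```python
from collections import deque


def play_back(script, can_be_seen, prompt_player):
    """Work through a recorded turn, one move at a time.

    script        -- list of (character, square) moves, in recorded order
    can_be_seen   -- hypothetical callback: can the watching player see this?
    prompt_player -- hypothetical callback: ask for a pick up / put down

    Returns the number of moves that were carried out silently.
    """
    queue = deque(script)
    hidden_moves = 0
    while queue:  # "are there any other moves left to do?"
        character, square = queue.popleft()
        if can_be_seen(character, square):
            # Visible: wait for the miniature to be moved on the board.
            prompt_player(character, square)
        else:
            # Hidden movement: apply the move with no intervention.
            hidden_moves += 1
    return hidden_moves
```

Everything hinges on what `can_be_seen` returns: if it wrongly says “no”, the character simply wanders off without waiting for anybody.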

As any programmer/engineer will tell you, it’s the intermittent problems that are the worst. I wrote a function into which I pass a destination and a team number and ask it to return true or false to the question “can any of my team see this square?”

It turns out that when the first two genestealers get up and go, the question “can team 2 see the character moving?” is true, because of the third character who remains behind on the starting square.

The problem is, when it’s the last character’s turn to move, nobody else on that team can actually see the moving character (since they’re facing the other way and I’ve already built the field-of-vision system that stops them seeing outside of a 180 degree arc!).

So even though the character is plainly visible on the screen, the computer thinks “well, if nobody can see this guy moving around, why wait for the player to pick up and put down their miniatures, just keep going until he appears in the line-of-sight of another character on team two…..”

Of course, what the function should return is “if you’re player two and the character moving is on team two, you should always be able to see it” – not only would this fix the bug, it’d also mean avoiding having to do lots of (CPU) costly raytracing and line-of-sight calculations.
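Here’s a minimal sketch of that fix, in Python for illustration rather than the app’s actual Unity code. The `Character` class, the `mover_team` parameter and the dot-product test for the 180 degree arc are all assumptions for the example, and it ignores walls completely; it only shows the “own team can always see it” short-circuit sitting in front of the more expensive checks.

```python
from dataclasses import dataclass


@dataclass
class Character:
    team: int
    x: int
    y: int
    facing: tuple  # direction as a grid step, e.g. (1, 0) for "east"


def in_front_arc(viewer, x, y):
    """True if square (x, y) is inside the viewer's 180 degree forward arc."""
    dx, dy = x - viewer.x, y - viewer.y
    # A non-negative dot product means the square is not behind the viewer.
    return dx * viewer.facing[0] + dy * viewer.facing[1] >= 0


def can_team_see(characters, team, x, y, mover_team=None):
    """Can anyone on `team` see square (x, y)?

    If the piece being moved belongs to `team`, the answer is always yes,
    and none of the per-character checks need to run at all.
    """
    if mover_team == team:
        return True
    return any(c.team == team and in_front_arc(c, x, y) for c in characters)


# The two genestealers who moved first, both facing away from the start square.
others = [Character(2, 5, 0, (1, 0)), Character(2, 6, 0, (1, 0))]
buggy = can_team_see(others, 2, 2, 0)                # nobody is looking back
fixed = can_team_see(others, 2, 2, 0, mover_team=2)  # own piece: always seen
```

With the two genestealers facing away, `buggy` comes out `False` (which is exactly the behaviour that sent the third character running off on his own), while `fixed` is `True` without looking at a single facing.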

And that’s the kind of dumb logic puzzle this game has descended into. I’m less fighting against the computer and more against my own facepalm stupidity!

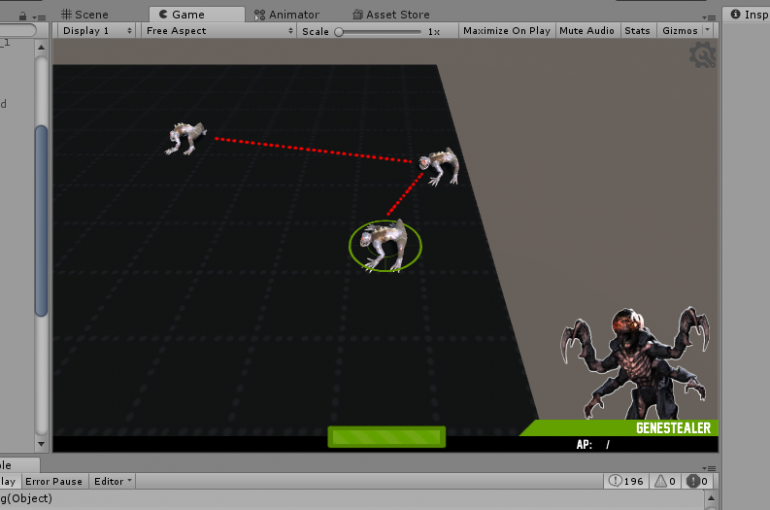

Line-of-sight testing

Line-of-sight is a crucial part of the game/app.

It allows us to have true hidden movement, and does away with the clumsy “blip” tokens which are often used either by the alien player as decoys or by the Space Marine player to avoid areas of a ship during their movement.

With hidden movement, if you can’t see an enemy character, they don’t appear on the board! So we can really add to the atmosphere of the game by implementing “proper” ambush moves, hiding around corners, behind doors and so on…

Here’s a simple line-of-sight test; it’s just one turn, but repeated from “both sides of the board”. To begin with, it’s team one (Space Marines) who have a free choice of movement….

The marine player takes a shot at a genestealer with their first character, then runs away to hide behind the wall. The second marine takes a shot and also runs behind the wall. They did a bit of messing about, back and forth, just to demonstrate that they are carrying out other moves, albeit out of sight of the alien player.

We then change the game to the alien player’s point of view, and replay the turn just completed by the marine player.

As expected, it prompts us to put down the character who is taking a shot (it does this so that when you’re playing on the tabletop with actual real miniatures, it’s clear just who is doing the shooting, without all your focus having to be on the video game playback).

The player is then prompted to pick up the miniature and put it down in the appropriate place. This repeats a few times. Then something funny happens…. the marine “disappears”.

Of course, they’re not gone from the game – just removed from the alien player’s line-of-sight. So any additional movements are carried out in secret.

This is more easily understood when the second marine moves. During his movement, the marine not only shot at the genestealer, but then ran off behind the wall, and continued to run about in circles, hidden from view. When this is played back from the alien player’s point of view we only actually see the movement up to the point where the character disappears behind the wall.

All the other movement is still played out – just out of sight of the alien player.

When the marine player’s turn ends and the alien player moves their piece(s), the marines remain entirely hidden from view – right up until one of the genestealers can see past the end of the wall.

At this point the app prompts the player to put a miniature down on the appropriate square. When the genestealer steps forward, they are able to see more of the board behind the wall – so the app prompts the player to place the second marine on the board.
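To give a feel for the “can I see past the end of the wall?” check, here’s a small sketch in Python. It is not how the app does it (that uses raytracing inside Unity); this swaps in Bresenham’s line algorithm on a square grid as a simple stand-in, and the `walls` set and function names are invented for the example.

```python
def line_cells(x0, y0, x1, y1):
    """Grid squares on a Bresenham line from (x0, y0) to (x1, y1), inclusive."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    x, y = x0, y0
    while True:
        cells.append((x, y))
        if (x, y) == (x1, y1):
            break
        e2 = 2 * err
        if e2 >= dy:   # step along x
            err += dy
            x += sx
        if e2 <= dx:   # step along y
            err += dx
            y += sy
    return cells


def has_line_of_sight(walls, start, end):
    """True if no wall square lies strictly between start and end."""
    path = line_cells(start[0], start[1], end[0], end[1])
    return not any(cell in walls for cell in path[1:-1])


walls = {(2, 0), (2, 1)}                                  # a short stretch of wall
behind_wall = has_line_of_sight(walls, (0, 0), (4, 0))    # view is blocked
past_the_end = has_line_of_sight(walls, (0, 2), (4, 2))   # stepped clear of it
```

Run after every step a genestealer takes, a check like this is what would flip a hidden marine from “not on the board” to “please put this miniature down here”.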

Apart from a few glitches with the camera during playback, the line-of-sight routines appear to be working quite well.

So it’s more than likely that I’ll have broken them again by this time tomorrow night, and will have yet another thumping headache and yet more hair pulled from my scalp in frustration.

But for tonight, I’m calling it a win!

More coding woes

Not even really “coding woes”. More like compiling and getting the sodding thing running across a variety of devices.

Unity is a multi-platform development environment – allowing you to use the same codebase for PC, Linux, Mac, iOS, Android… even XBox and PSP! But with such versatility comes great complexity.

And I’ve just spent nearly a day and a half just getting the thing to compile for Android – all thanks to automatic updates to the IDE and Google’s recent requirement that all apps submitted to the store must target API 26 (Oreo) or above.

I’m not really sure what this means. My own device is running Marshmallow and my Chui H12 tablet running the next OS up (whatever that is, in Android-land). Just a few weeks ago, I’d successfully dropped a new app onto my phone, but that was before Google updated their terms and conditions.

Plus, it turns out there’s a bug in the latest Android Studio for API 26.0.0 – they’ve forgotten to include the apksigner .jar files so you have to manually copy older versions over from existing dev environments.

Not exactly difficult to do – but it’s taken three hours just to find the reason Unity compiles with a “success” message, but nothing appears on the phone!

Feeling the pressure as deadline looms....

It was always going to be a close call – and it’s looking likely that we’re not going to be 100% complete by the end of the week – but there’s a chance we’ll at least have something functional (and able to demonstrate with a cool video, that I’ve been promising for weeks now…)

Anyway, coding is (thankfully) nearing an end, and it’s been really exciting to see miniatures moving about on the tabletop, interacting with the digital avatars, onscreen. It’s still a bit clunky, so not quite ready to post a video yet (I really do hope it’s going to be worth the wait – I’ve given it quite a build up over the last week or so!)

But, of course, it’s not all about writing code.

This was a hobby challenge – and that means painting miniatures. I’ve had some “regular” space marines hanging around in the loft for a few years and, while everyone gets all excited about the new Primaris Marines (me included), I thought I’d take the chance to finish off some guys that have been on the back burner for a while.

It was my first real attempt at edge highlighting and putting a little more effort in than just blobbing two or three different colours on; I started with a really bright blue base coat, whacked on some white then slathered the whole lot with Army Painter Strong Tone.

Then I re-painted over most of the large panels, before hitting the edges with a nice bright blue to make them “pop”; sure, they’re not quite as grimdark as “real” marines, and look more like they’ve fallen out of the pages of a comic book, but they’ll do for me….

One day I hope to spend a little more time learning how to paint dingy, realistic looking models. But to get finished in time for the end of this week, bright, clean, cartoon lines are going to have to suffice!

On a completely unrelated note, while testing out my miniatures on the electronic/interactive playing surface, I had a few problems placing the minis in exactly the right place, to trigger the sensor(s).

It’s important that there are “dead spots” on the board – areas where the magnets don’t trigger a sensor – so that as you move the piece across the tabletop, there are definite “no piece present” events; if the sensors were too close together (or the magnets too strong) it’s possible that one sensor might trigger before the previous one had “released”.
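A quick sketch of why those dead spots matter, in Python for illustration (the real boards run firmware on a microcontroller, and this isn’t it). The `sensor_events` function and the idea of each reading being a set of square names are assumptions for the example. It just compares each snapshot of triggered sensors with the one before and turns the differences into events.

```python
def sensor_events(readings):
    """Convert successive sensor snapshots into pick-up / put-down events.

    Each snapshot is the set of squares whose hall sensor currently
    detects a magnet (a hypothetical representation of the board state).
    """
    events = []
    previous = set()
    for current in readings:
        for square in sorted(previous - current):
            events.append(("pick_up", square))    # magnet has left this sensor
        for square in sorted(current - previous):
            events.append(("put_down", square))   # magnet has arrived here
        previous = set(current)
    return events


# With a dead spot between squares: A1 releases before A2 triggers.
clean = sensor_events([{"A1"}, set(), {"A2"}])

# Sensors too close together: A2 triggers while A1 is still held.
overlapping = sensor_events([{"A1"}, {"A1", "A2"}, {"A2"}])
```

In the `clean` case the events arrive as put down A1, pick up A1, put down A2. In the `overlapping` case the “put down A2” turns up before the “pick up A1”, so for a moment it looks like there are two miniatures on the board.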

As a result, I figured it wouldn’t hurt to paint a simple grid onto the surface of the board.

A simple laser cut template and some grey acrylic did just the job. I don’t care what Warhammer Duncan says, there was no way I was going to thin my paints and paint this with “two thin coats”. Dab, dab, blob blob, and we’re done.

Web based character interface

One of the great things about Space Hulk (or pretty much any small-scale tabletop skirmish game, that requires you to create individual characters) is customising the skills and weapons that your characters use in the game.

As the end-of-November deadline looms, I’m having to cut out all the “niceties” and focus on just the practical functionality of the game.

But that doesn’t mean compromise; I’ve already spent too much time creating onscreen avatars that can be customised, with different appearance, armour and – most importantly – weapons (sure, all but a couple only fire what look like ping-pong balls at the minute, but being able to put a specific character with a specific weapon in a specific place on the board – well, that’s what the game’s all about, surely?)

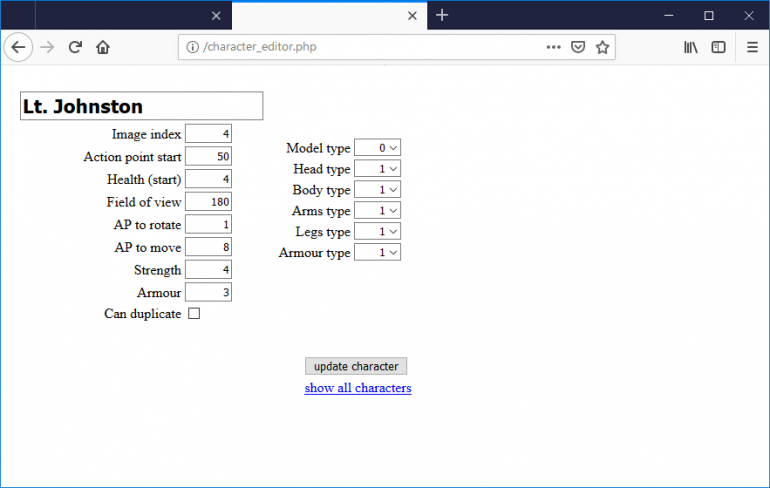

So here’s a simple online editor, where we can rename our characters, assign imagery for them (to display when their miniature is being moved on the tabletop) and select the weapons they use.

Until I’ve fully tested and debugged the app, I’ve deviated slightly from the rules of Space Hulk; I’m using a simple action points system, where every action takes a set number of APs to perform – this is actually the easiest way to make sure that everything is working as it should.
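As a rough idea of what a “simple action points system” can look like, here’s a sketch in Python (illustration only, not the app’s code). The action names and every number in `AP_COSTS` are invented for the example; they are neither the values used in the app nor the Space Hulk rules.

```python
# Made-up costs purely for illustration.
AP_COSTS = {"move": 1, "turn": 1, "open_door": 1, "fire": 2}


class OutOfActionPoints(Exception):
    """Raised when a character tries an action they cannot afford."""


def spend(ap_left, action):
    """Return the action points remaining after performing `action`."""
    cost = AP_COSTS[action]
    if cost > ap_left:
        raise OutOfActionPoints(f"{action} costs {cost}, only {ap_left} left")
    return ap_left - cost


ap = 4
ap = spend(ap, "move")  # 3 left
ap = spend(ap, "fire")  # 1 left
```

Keeping the costs in one table means swapping in something closer to the real rules later is mostly a matter of changing the numbers.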

Once I’ve got the movement, line-of-sight and in-game scenery in place, I can replace this arbitrary system of assigning numbers to things with rules that more closely resemble the actual game of Space Hulk.

Not much to do, then, before Friday……
